Windows Communication Foundation – Consuming a WCF service with ChannelFactory

June 13, 2012

This series of posts will talk about Windows Communication Foundation, starting with an introduction to the technology and how to use it in your project, then moving on to more advanced topics such as using an IOC container to satisfy dependencies in your services.

Posts in this series:

- An Introduction to Windows Communication Foundation – Creating a basic service

- Windows Communication Foundation – Consuming a WCF service with ChannelFactory

- Windows Communication Foundation – Resolving WCF service dependencies with Unity

- Windows Communication Foundation – Resolving MVC client WCF ChannelFactory dependencies with Unity

In An Introduction to WCF I talked a little about what WCF is and how it can be used, as well as creating a simple WCF service. In this post I want to talk about how to consume WCF services in the most common situations.

There are two common scenarios when consuming WCF services: consuming a WCF service from a non-WCF client via a proxy, and consuming a WCF service from a WCF client via a shared contract with ChannelFactory.

Consuming a WCF service from a non WCF client via a service proxy

A service proxy acts as an interface between the client and the service. It means that the client does not need to know anything about the implementation of the service other than the operations that are exposed. A downside to using a proxy is that if the interface of the service changes then the proxy will need to be regenerated. Using a service proxy will be necessary if you do not control the service you wish to consume.

To add a service proxy you can either use svcutil.exe or let Visual Studio generate the proxies for you (which uses svcutil.exe underneath) by right-clicking on a project and selecting Add Service Reference.

I don’t really want to dwell on service proxies too much, as there is lots of information available already and it is not really very interesting. There is another way to consume a WCF service if you have more control over the service and are using WCF services to provide some separation of concerns in your application.

Consuming a WCF service from a WCF client using ChannelFactory

A real world example is probably the easiest way to describe the type of situation in which this approach is useful. Let's say I am creating an ASP.NET MVC application and I want to have some separation in the layers. Alongside the MVC client I want to have a repository to take care of the persistence side of things and a service layer to take care of the business logic, so that I can call a service method in the Controller of the client that will perform some operation and maybe talk to the repositories.

It is of course possible to have the services in-process, and for a small application it may be a sensible solution. When moving to a larger application it may be prudent to make the service layer true services that can be deployed on a separate server (or server farm) to aid scalability and security. WCF is an ideal technology for these services. In this instance the WCF services are only to be used by this particular application, so we can use some more WCF functionality to remove the need to keep generating service proxies. You will definitely appreciate this as you develop your application. Regenerating proxies gets a little tiresome after the hundredth time.

To use ChannelFactory it is necessary that both client and service host share the same operation and data contracts. Usually this means that the service interfaces (operation contracts) and data contracts are separated into separate assemblies so they can be shared between projects.
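As a rough illustration, the shared contracts for the Product service might look something like the sketch below. The names IProductService, GetProductById, Id, Name and Description come from the sample used in this post; the property types, the Product class name and its namespace are assumptions, and both contracts are shown in a single file only for brevity.

```csharp
using System.Runtime.Serialization;
using System.ServiceModel;

namespace WcfServiceApplication.Contract
{
    // Service (operation) contract referenced by both the service host and the client.
    [ServiceContract]
    public interface IProductService
    {
        [OperationContract]
        Product GetProductById(int productId);
    }

    // Data contract returned by the operation.
    // Assumption: the class name, namespace and property types are illustrative.
    [DataContract]
    public class Product
    {
        [DataMember]
        public int Id { get; set; }

        [DataMember]
        public string Name { get; set; }

        [DataMember]
        public string Description { get; set; }
    }
}
```

Because the client references these types directly, a change to the interface shows up as a compile error in the client rather than as a stale proxy.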

What is ChannelFactory anyway?

According to MSDN it is “A factory that creates channels of different types that are used by clients to send messages to variously configured service endpoints”. WCF uses a Channel Model layered communication stack to communicate between endpoints. As with other stacks such as TCP/IP, each layer of the Channel stack provides an abstraction of the communication between the corresponding layers in the sending and receiving stacks.

The stack provides an abstraction for how the message is delivered, the protocol to be used and other features such as reliability and security. Messages flow through the Channel stack and are transformed by a particular Channel, for example the transport channel transforms the message into the required communication format. Above that the protocol channels might encrypt the message or add headers. It is the ChannelFactory that is used to create the channel stack for a particular binding. For more information on the Channel Model see this MSDN article.
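One way to get a feel for the layers is to list the binding elements that a binding is made of, since the channel stack is built from them. The following is only a small sketch, assuming a default WSHttpBinding; the class name BindingStackInspector is made up for illustration, and the exact elements printed depend on how the binding is configured.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;

// Hypothetical console helper, not part of the sample solution.
public static class BindingStackInspector
{
    public static void Main()
    {
        // Assumption: a default wsHttpBinding, matching the binding name used in this post.
        Binding binding = new WSHttpBinding();

        // Each binding element describes one layer of the stack.
        // Protocol elements come first and the transport element is last.
        foreach (BindingElement element in binding.CreateBindingElements())
        {
            Console.WriteLine(element.GetType().Name);
        }
    }
}
```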

Using ChannelFactory to call a service

In the previous post I created a simple Product service with a single operation. I am going to extend that example to add an MVC client that uses ChannelFactory to consume the service. I will break the process into a number of steps to make it easy to follow.

1) Reorganise the solution to separate the interface and data contracts

I have moved the service interface and the data contracts into separate assemblies so they can be shared with the client. As mentioned briefly in the previous post, the data contracts would usually be Data Transfer Objects and not domain entities. For this example I have used domain entities as data contracts for ease. I have also added an ASP.NET MVC 4 project that will act as the client and consume the WCF service.

2) Add the WCF service model configuration to the client Web.config

Just as we added some configuration to the Web.config of the service project to let WCF know how we want the service to work, we also need to add some similar configuration to the client Web.config.

    <system.serviceModel>
      <client>
        <endpoint address="http://localhost:53313/ProductService.svc"
                  binding="wsHttpBinding"
                  contract="WcfServiceApplication.Contract.IProductService"
                  name="WSHttpBinding_IProductService"/>
      </client>
    </system.serviceModel>

We can once again see the ABC of WCF. The address is the URL of the service, which I have set to the URL of the service when it is running from Visual Studio. When the client is deployed the address needs to be changed accordingly. The binding needs to be the same as the binding of the service you want to consume. The contract is the service contract from the shared assembly. Finally, a name is used so ChannelFactory can get the configuration details by name.
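For comparison, the same address, binding and contract can also be supplied in code rather than in Web.config. This is only a sketch of that alternative, not what the sample project does; the class name ProductServiceChannelFactory is hypothetical, and it assumes the shared contract assembly containing IProductService is referenced.

```csharp
using System.ServiceModel;
using WcfServiceApplication.Contract; // shared contract assembly (assumed to be referenced)

// Hypothetical helper showing the ABC expressed in code instead of configuration.
public static class ProductServiceChannelFactory
{
    public static ChannelFactory<IProductService> Create()
    {
        // B: the binding, which must match the binding exposed by the service.
        var binding = new WSHttpBinding();

        // A: the address of the service when running from Visual Studio.
        var address = new EndpointAddress("http://localhost:53313/ProductService.svc");

        // C: the contract is the generic type argument.
        return new ChannelFactory<IProductService>(binding, address);
    }
}
```

Keeping the endpoint in configuration, as this post does, has the advantage that the address can be changed at deployment time without recompiling.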

3) Use ChannelFactory to call the service

As this is an MVC application, a service would generally be called from a controller. I have created a ProductController and a couple of views. The simple application works like this: a user enters a Product Id and selects to search. The product details are then displayed. The controller action that calls the service looks like this:

    public ActionResult View(int productId)
    {
        // Create a factory for the shared service contract using the named client endpoint.
        var factory = new ChannelFactory<IProductService>("WSHttpBinding_IProductService");

        // Create a channel that implements the service contract.
        var wcfClientChannel = factory.CreateChannel();

        // Call the service operation through the channel.
        var result = wcfClientChannel.GetProductById(productId);

        // Map the result onto the view model.
        var model = new ViewProductModel
        {
            Id = result.Id,
            Name = result.Name,
            Description = result.Description
        };

        return View(model);
    }

Firstly, create a new factory for the service contract required. The constructor takes the name of an endpoint from the configuration. A channel is then created based on the service contract and the named endpoint configuration. It is then possible to call an operation on the channel and return some results. I have then used the results in the model for the view.

Let's have a look at the MVC client application in action. I am not going to win any awards for design…

Let's search for a product:

And view the results:

Conclusion and next steps

As you can see from the above example, using ChannelFactory is a very easy way of calling a service when you have access to the service and data contracts. However, if you were calling multiple services from a controller it would be better to get some reuse and not have to keep creating the factory and channel over and over again. It is possible to create a helper method to control the creation and use of factories, but there is a better way.
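To give an idea of what such a helper method could look like, here is a minimal sketch. ServiceInvoker and Call are hypothetical names and are not part of WCF or the sample solution. The sketch also closes the channel and factory after the call and aborts them on failure, which the controller action above leaves out for simplicity.

```csharp
using System;
using System.ServiceModel;

// Hypothetical helper; the class and method names are made up for illustration.
public static class ServiceInvoker<TChannel> where TChannel : class
{
    public static TResult Call<TResult>(string endpointName, Func<TChannel, TResult> operation)
    {
        // Create the factory from the named client endpoint in configuration.
        var factory = new ChannelFactory<TChannel>(endpointName);
        var channel = factory.CreateChannel();

        // Channels created by ChannelFactory also implement IClientChannel.
        var client = (IClientChannel)channel;
        try
        {
            TResult result = operation(channel);

            // Close gracefully once the call has succeeded.
            client.Close();
            factory.Close();
            return result;
        }
        catch (Exception)
        {
            // Close can itself throw, so abort to release resources and rethrow.
            client.Abort();
            factory.Abort();
            throw;
        }
    }
}
```

A controller action could then call the service with something like `ServiceInvoker<IProductService>.Call("WSHttpBinding_IProductService", s => s.GetProductById(productId))`. Creating a factory on every call is relatively expensive, so a fuller version would probably cache the factory per endpoint.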

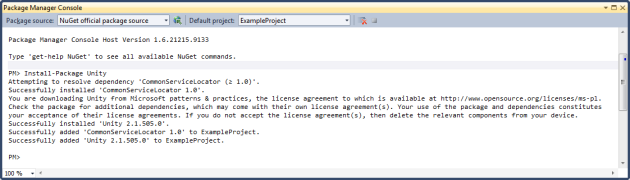

You are probably already using Dependency Injection in your application via an Inversion of Control container such as Castle Windsor or Unity. It turns out that it is very easy to inject dependencies into a service and also to inject a channel directly to where it is needed in your application. In the next post I will look at injecting dependencies into the service implementation.
